PHASE GARDEN

Intro

We asked ourselves “what is the Solstice” as a phenomenon, an observation, a cultural-scientific event. Even from that starting point the piece began to appear to us - as we imagined zooming out from our perspective on planet earth in the northern hemisphere to a decidedly “other” perspective, the elegant, overlapping rhythms of the bodies at play became hypnotic to our imaginations.

We are rhythmical creatures. The day and night rhythms that dominate our lives are perhaps the purest example of this - and yet they change so slightly. This phasing of observable rhythms (however slightly they overlap and phase) is of course core to the phenomenon that is the solstice - or the “sun stands still” moments of our solar journey. Observing a moment, the solstice for example, and then predicting it, is a feat of measurement, patience and theory. As philosopher and theorist Karen Barad puts it, “The nature of nature depends on how you measure it.”

The solstice is “a thing” when one observes it. The rhythms that coalesce in the event are so slow that we rely on calendars, astronomers and, of course, the religious institutions that co-opted the season to announce its arrival. Our interest is to create, through light and sound, our own description, measurement and expression of many of the combining rhythms that produce the solstice event.

Thesis

Play with, hold and feel in various ways the cosmic rhythms that contribute to and even define our sense of time. Create a system of logic that derives our creative palette directly from the orbital and rotational phenomena of our planet, its moon and the sun. Allow ourselves to play with these waves in various ways, in combination and at a condensed scale. Finally, present this description in a manner that allows a participant to move freely about an immersive, multi-channel environment in a natural setting. How do we map time, and how do we describe a solstice?

Experience

As artists working in sound and light, and specifically as musicians, our fascination with rhythm and its ability to seduce us into experiences is deeply at play in this artwork.

This artwork could be formally considered an installation - it is not a performance nor is it concertized - but it is an evolving, ever-unique audio-visual scored experience. The work is situated in a 230’ diameter circular garden. The concentric rings of the garden’s landscaped areas and walking paths are conducive to a strolling experience and we embraced this design. We chose to place 12 truss towers at regular intervals along the middle pedestrian path, roughly 36’ apart. Each tower contains a loudspeaker and a number of fixed color-changing lights, and is topped with a large color-changing moving light.

Therefore, the sound system is a 12.1 channel system, with each tower holding one of 12 channels plus a subwoofer. The lighting instruments are all individually addressable and the moving fixtures are dynamically controlled as part of the same governing logic that drives the entire piece.
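The tower layout can be sketched numerically. This minimal Python sketch assumes the 36’ spacing is the straight-line distance between neighbouring towers and derives the path radius from it. The radius of the middle path is not stated in the text, and the function names are illustrative only.

```python
import math

GARDEN_DIAMETER_FT = 230.0  # from the text
TOWER_COUNT = 12            # from the text
TOWER_SPACING_FT = 36.0     # "roughly 36 feet" from the text, assumed straight-line


def path_radius_from_spacing(spacing_ft, count):
    """Radius of the circle on which `count` evenly spaced points sit
    `spacing_ft` apart, measured as a straight chord between neighbours."""
    return spacing_ft / (2.0 * math.sin(math.pi / count))


def tower_positions(radius_ft, count):
    """(x, y) in feet for each tower, tower 0 at the top, going clockwise."""
    positions = []
    for i in range(count):
        angle = 2.0 * math.pi * i / count
        positions.append((radius_ft * math.sin(angle), radius_ft * math.cos(angle)))
    return positions


radius = path_radius_from_spacing(TOWER_SPACING_FT, TOWER_COUNT)
towers = tower_positions(radius, TOWER_COUNT)
```

Under these assumptions the towers sit on a ring of roughly 70’ radius, comfortably inside the 115’ radius of the garden.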

An illustration of Asa Gray Garden from above.

To begin, we chose a number of periodic events that are relative to the solstice phenomenon from our unique perspective as humans on planet Earth. We used the “real year” or “one solar unit” as the basis for our periodic relationships - in other words, from one winter solstice to the next.

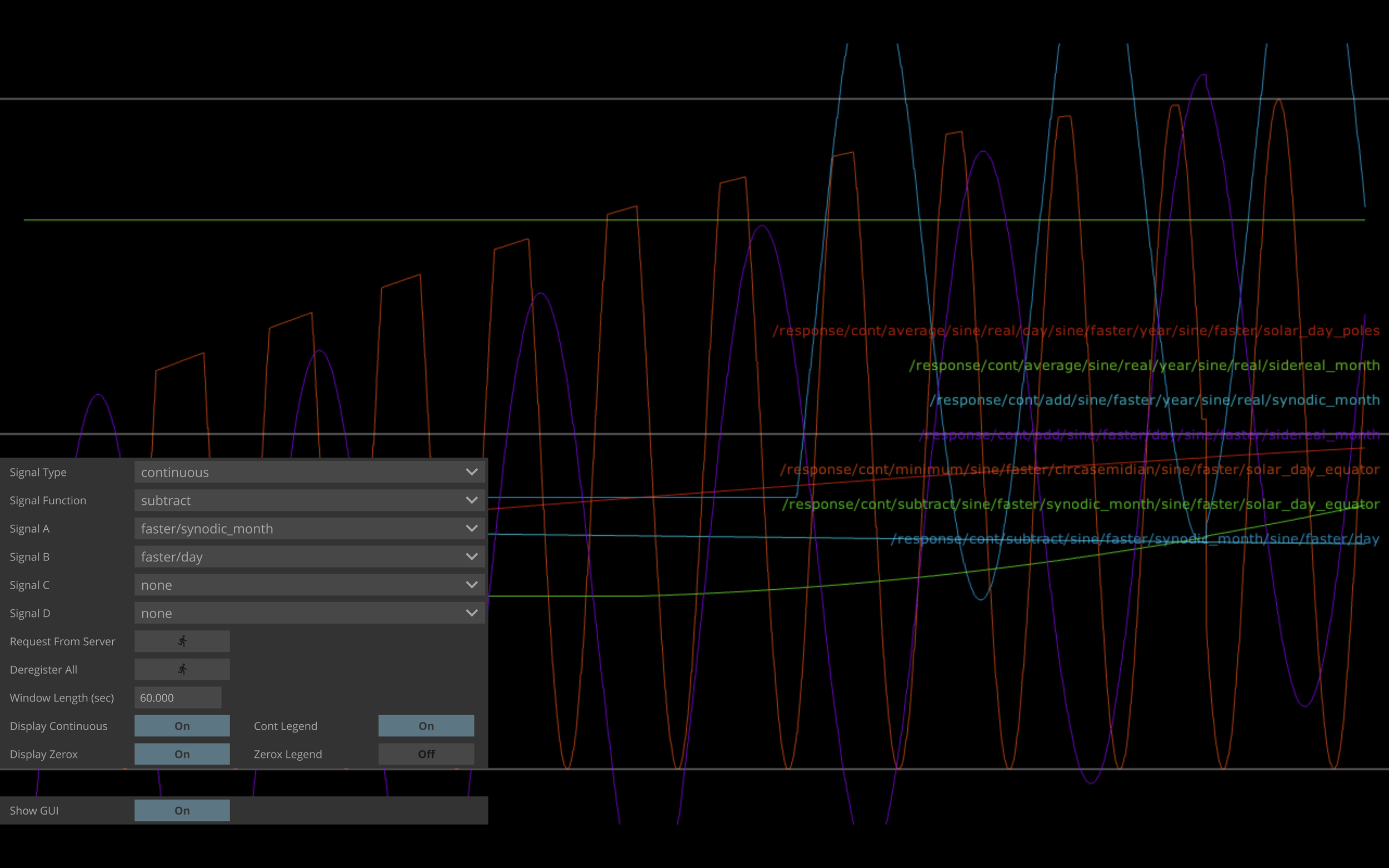

You will notice the “real” and “faster” categories of time scale in the chart above. “Real” is just that: the actual event period (one year is winter solstice to winter solstice, and so on). We also created a condensed, relative version of these periods, with the year compressed to one hour. This decision is one of a handful of scale and combinatory filters we employed in our effort not only to describe the solstice and its related events, but to make that description available. Additionally, we incorporated the option to combine these periods using various functions: addition, subtraction, division, multiplication, average, minimum and maximum. We argue these combinations serve the piece (serve the composers, to be fair) by introducing periods that dynamically evolve over time, as well as new and varied period shapes. Their combination through function may also approach how we actually perceive periodic phenomena. Our view is that we never observe or experience one of these periodic events alone - we are always experiencing our circasemidian rhythm, while being aware of the phase of the month (lunar position), as we discuss the season (solar year). We experience combinations of waves perceptually as well as physically, and therefore the functional combinations of waves felt relevant to the work.
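The compression and the combining functions described above can be sketched in a few lines of Python. This is a hedged illustration, not the project's code. The three periods are standard mean astronomical values chosen as stand-ins, since the chart may list different cycles. The sine shape, the divide-by-zero guard and all names are assumptions.

```python
import math

SECONDS_PER_DAY = 86_400.0

# Assumed "real" periods in seconds (stand-ins for the chart in the piece).
REAL_PERIODS = {
    "day": SECONDS_PER_DAY,
    "lunar_month": 29.530588 * SECONDS_PER_DAY,  # mean synodic month
    "year": 365.2422 * SECONDS_PER_DAY,          # mean tropical year
}

COMPRESSED_YEAR_SECONDS = 3600.0  # one year squeezed into one hour (from the text)


def compress(period_s):
    """Scale a real period so that one year lasts one hour."""
    return period_s * COMPRESSED_YEAR_SECONDS / REAL_PERIODS["year"]


def wave(period_s, t):
    """Sine wave with the given period, sampled at time t (seconds)."""
    return math.sin(2.0 * math.pi * t / period_s)


def safe_divide(a, b, eps=1e-9):
    """Division that returns 0.0 near a zero divisor (an assumed convention)."""
    return a / b if abs(b) > eps else 0.0


# The seven combining functions named in the text.
COMBINERS = {
    "add": lambda a, b: a + b,
    "subtract": lambda a, b: a - b,
    "multiply": lambda a, b: a * b,
    "divide": safe_divide,
    "average": lambda a, b: (a + b) / 2.0,
    "minimum": min,
    "maximum": max,
}


def combined(op, name_a, name_b, t, fast=True):
    """Combine two named waves at time t, at the compressed or real scale."""
    scale = compress if fast else (lambda p: p)
    a = wave(scale(REAL_PERIODS[name_a]), t)
    b = wave(scale(REAL_PERIODS[name_b]), t)
    return COMBINERS[op](a, b)
```

With these stand-in values, a compressed “day” lasts just under ten seconds and a compressed lunar month a little under five minutes.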

As we studied these cycles we needed to observe them to discuss and imagine how we might make an experience-driven artwork tangible. We wrote a simple piece of software to “scope” any of the aforementioned waves and their combinations in real time. For this observational tool (as well as for the resulting artwork) the waves are all generated with exact relativity to the actual solar / planetary events. In other words, when the “day” period strikes noon, that would be scoped as a zero-crossing in our read-out.
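A minimal sketch of this phase alignment, assuming a sine wave anchored to a known reference moment such as a noon. The anchor is hypothetical and uses clock time; the text does not say whether the piece uses clock noon or true solar noon.

```python
import math
from datetime import datetime, timedelta


def phase(now, anchor, period):
    """Fraction of the cycle elapsed since the anchor moment, in [0, 1)."""
    return ((now - anchor) / period) % 1.0


def anchored_wave(now, anchor, period):
    """Sine wave that crosses zero, rising, exactly at the anchor moment."""
    return math.sin(2.0 * math.pi * phase(now, anchor, period))


# Hypothetical anchor: a clock noon. Any real noon works the same way.
NOON = datetime(2024, 1, 1, 12, 0, 0)
DAY = timedelta(days=1)

at_noon = anchored_wave(NOON + 3 * DAY, NOON, DAY)                 # zero-crossing
at_six_pm = anchored_wave(NOON + timedelta(hours=6), NOON, DAY)    # crest
at_six_am = anchored_wave(NOON - timedelta(hours=6), NOON, DAY)    # trough
```

The same two functions serve any cycle: a year wave would take a winter solstice as its anchor and one solar year as its period.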

A screenshot of the wave visualizer.

Over 1000 wave combinations are available using just this already restricted set of options. Through our compositional process, we ultimately chose about 3 dozen waves to reference as the driving expressions in our work.

Each of these selected waves (and combinations of waves) is generated by a central server for the artwork and made available to both the lighting interface and sound interface simultaneously. Our process of aestheticizing these cyclic phenomena therefore took the form of choosing a wave, assigning it to a sound source and pairing it with a lighting event. We additionally assigned wave periods (rates) to the orbiting of these wave-driven triggered events, creating a “panning” or “orbiting” series of sound and light expressions across (around) the 12-channel tower system.
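One simple way to picture the orbiting is to treat a wave's phase as a position on the ring and crossfade between the two nearest towers. The sketch below uses a plain equal-power crossfade. It is an illustration only and not the spatialization the project actually used; the function name is hypothetical.

```python
import math

TOWER_COUNT = 12  # from the text


def orbit_gains(phase, count=TOWER_COUNT):
    """Per-tower gains for a source at `phase` (0..1) around the ring.

    The two towers adjacent to the source share the signal with an
    equal-power crossfade, so the squared gains always sum to 1.
    """
    position = (phase % 1.0) * count
    lower = int(position) % count      # tower just behind the source
    upper = (lower + 1) % count        # tower just ahead, wrapping 11 -> 0
    frac = position - int(position)    # how far between the two towers
    gains = [0.0] * count
    gains[lower] = math.cos(frac * math.pi / 2.0)
    gains[upper] = math.sin(frac * math.pi / 2.0)
    return gains
```

Feeding a slowly advancing phase into `orbit_gains` makes a sound circle the garden once per period; the same gains could drive light intensity on each tower.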

The melodic pitches selected, as well as the color palette, were also derived from the relationships of the waves used in the project. Using the ranges of audibility and visibility as the high and low parameters, we derived our pitch and color palettes accordingly. In the case of the sound pitches, we admit, we did use the closest tempered-note values to the wave-derived values. After creating a frequency-true scale based on the wave relationships, it was clear that, in scientific terms, it sounded like shit. We decided that the effort of the project was not a literal description of the planetary events (not simple data visualization) but rather a poetic experience, and that preferring consonance in pitch as we generally recognize it in Western music was therefore acceptable.
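The text does not spell out how a wave period becomes a pitch. One common approach, assumed here and not confirmed by the source, is octave transposition: double the cycle's frequency until it reaches the audible range, then snap to the nearest equal-tempered note. The 110 Hz floor, the A4 = 440 Hz reference and the function names are all assumptions.

```python
import math

A4_HZ = 440.0  # assumed tuning reference
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def octave_shift(period_s, floor_hz=110.0):
    """Double the frequency of a cycle until it is at least `floor_hz`."""
    freq = 1.0 / period_s
    while freq < floor_hz:
        freq *= 2.0
    return freq


def nearest_tempered(freq_hz):
    """Return (note name, tempered frequency) closest to `freq_hz` in 12-TET."""
    midi = round(69 + 12 * math.log2(freq_hz / A4_HZ))
    name = f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"
    return name, A4_HZ * 2.0 ** ((midi - 69) / 12)


day_true = octave_shift(86_400.0)                 # about 194.18 Hz
day_note = nearest_tempered(day_true)             # snapped to G3
year_true = octave_shift(365.2422 * 86_400.0)     # about 136.10 Hz
year_note = nearest_tempered(year_true)           # snapped to C#3
```

The gap between `day_true` and the tempered G3 is the kind of small detuning that the frequency-true scale keeps and the tempered scale smooths away.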

In other words, we took this literal translation of the waves to pitches:

And simplified it to this for our scoring (and your listening) pleasure:

Similarly for our color palette we derived a 14-hue set and chose from these as our thesis-derived interpretation and selection of values for the lighting events.

The particular sounds triggered (instrument, texture, treatment, etc.) and the envelopes of the events (attack, decay, sustain, release) are all managed by the composers through the software systems employed for expression.

Technology

Our “playback” system is actually a real-time generating system. Nothing is fixed for playback, other than the sound textures, colors and wave assignments pre-determined in our compositional process. Onsite at the installation is a server generating the waves requested in real time, and our sound and light generating systems are subscribed to this server and producing the sound and light events in real time throughout the installation period. In this way, the artwork is not fixed, it is generative and unique in every moment. Any coincidental events, melodic combinations, and rhythmical passages are unique to the moment they are observed in.

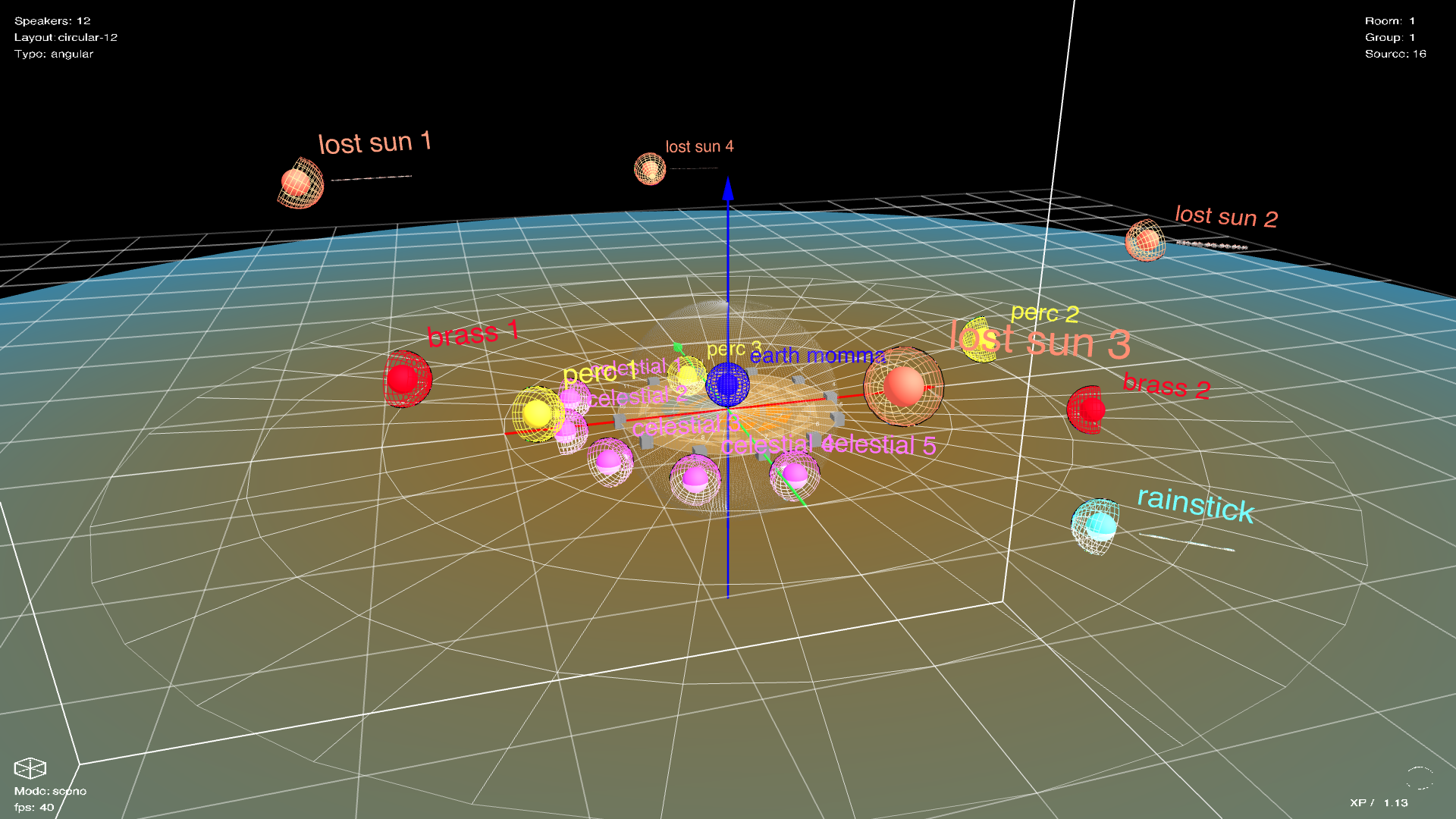

Sound - We employed Ableton Live as our central “instrument” for sound production and we used the XP.4Live suite of software for our spatialization needs. In Ableton, we built a number of custom instruments including samples from the cemetery site and new recordings of instruments, environmental sounds, software synthesis, and more to create the sonic atmosphere. The XP.4Live suite proved wildly interesting and allowed us not only to sculpt a sonic environment driven by our tower placement in the round, but also to explore a host of additional ambisonic and spatialization algorithms. Crucially, the XP software features a dynamic, customizable visualizer that aided our design process immensely.

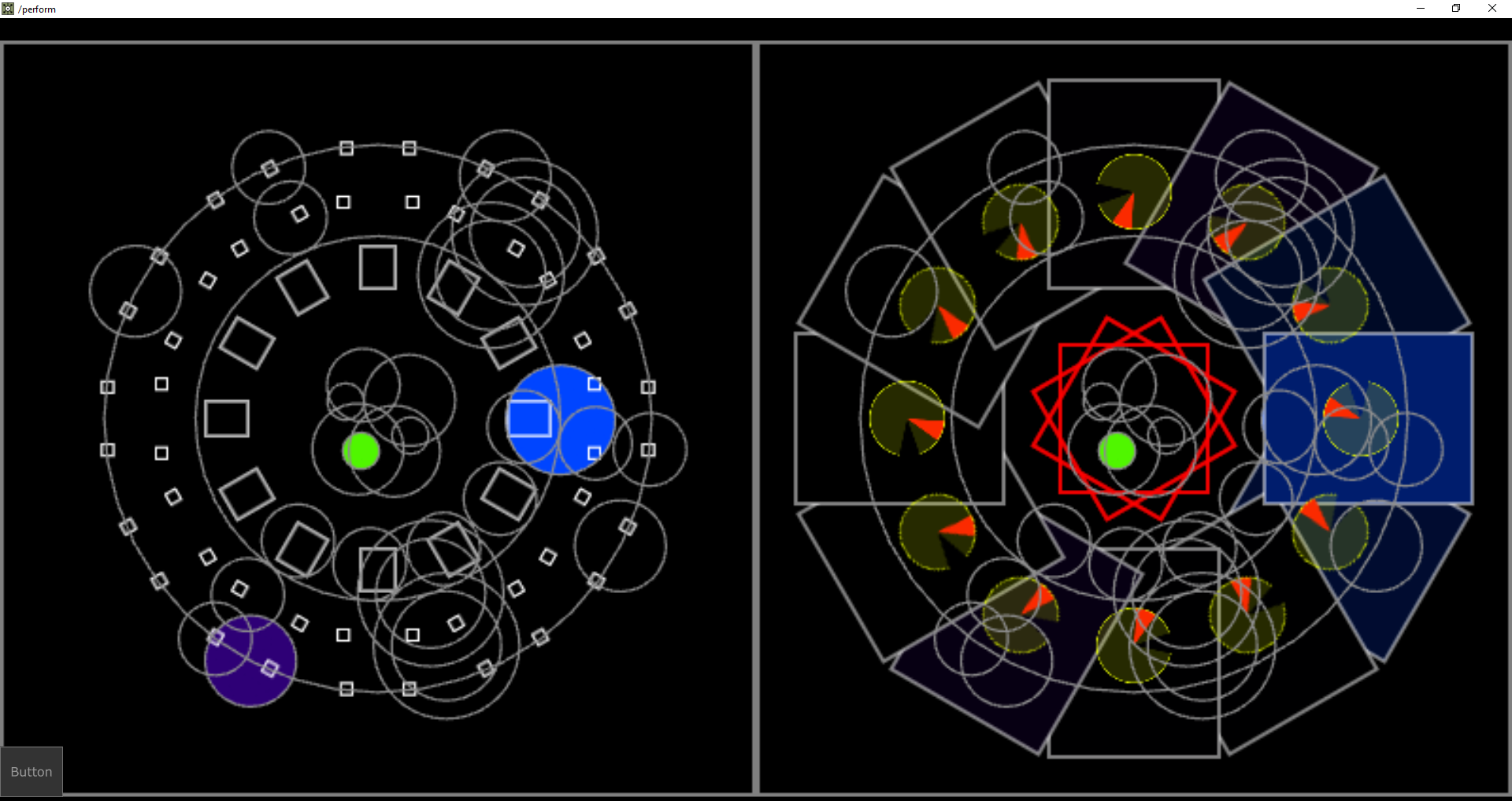

Lighting - Our team opted to create a custom patch in TouchDesigner (TD) to visualize and trigger the lighting events for the piece. This system allowed us to manage fixture placement and observe illumination in time with the generated waves, while we retained flexibility in how we might tweak the orbital and durational lighting effects. We also needed a way to score for the moving head lighting fixtures that stayed true to our system of reference-wave-generated audio-visual expression, and TouchDesigner allowed us to build logic with the expression and functionality to achieve this goal. The system seen below renders our interface, which depicts the wave-driven timing of an orbital event (panning). When a trigger is pushed to the system, the illumination of the ring is translated, through pixel sampling, to DMX, which the lighting fixtures read at their predetermined addresses.
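The pixel-sampling step can be illustrated outside of TouchDesigner with plain Python. This sketch assumes the ring is a strip of RGB pixels with values from 0 to 1, and that each fixture takes three consecutive DMX channels (red, green, blue) from its start address. Real fixtures usually have more channels and different layouts, and the addresses here are hypothetical.

```python
DMX_UNIVERSE_SIZE = 512  # channels in one DMX512 universe


def sample_ring(ring_pixels, fixture_count):
    """Pick one evenly spaced (r, g, b) pixel per fixture from the ring strip."""
    step = len(ring_pixels) / fixture_count
    return [ring_pixels[int(i * step)] for i in range(fixture_count)]


def to_dmx_frame(colors, start_addresses):
    """Write each color into a 512-byte frame at its fixture's start address."""
    frame = bytearray(DMX_UNIVERSE_SIZE)
    for (r, g, b), address in zip(colors, start_addresses):
        base = address - 1  # DMX addresses are 1-based, the buffer is 0-based
        for offset, value in enumerate((r, g, b)):
            frame[base + offset] = max(0, min(255, round(value * 255)))
    return frame


# A dark 24-pixel ring with a single red pixel where the orbit currently sits.
ring = [(0.0, 0.0, 0.0)] * 24
ring[2] = (1.0, 0.0, 0.0)

# Hypothetical addressing: 12 fixtures spaced 10 channels apart.
addresses = [1 + 10 * i for i in range(12)]
frame = to_dmx_frame(sample_ring(ring, 12), addresses)
```

Here only the second fixture lights up, because the red pixel falls on the sample point for tower 1.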

Screenshot of the lighting visualizer.

Wave generation - As mentioned above, we wrote a simple software patch to observe, in real time, where each of the desired events sits in its period at any given point. The same software can also combine waves by a requested function and make the results available to the subscribed sound and light rendering systems (Ableton and TD). The script runs in Python and produces OSC messages that are read by the aforementioned systems.
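To make the OSC step concrete, here is a minimal sketch that encodes a single-float OSC message by hand using only the Python standard library and sends it over UDP. The address pattern `/wave/day`, the host and the port are hypothetical; the source does not describe its message layout or whether it uses an OSC library.

```python
import socket
import struct


def osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    padding = (4 - len(data) % 4) % 4
    return data + b"\x00" * padding


def osc_float_message(address, value):
    """Build an OSC message carrying one 32-bit big-endian float."""
    return osc_string(address) + osc_string(",f") + struct.pack(">f", value)


def send_wave(sock, host, port, name, value):
    """Send the current value of one named wave to a subscriber over UDP."""
    sock.sendto(osc_float_message(f"/wave/{name}", value), (host, port))


# Encoded form of a hypothetical "day" wave currently at 0.5.
packet = osc_float_message("/wave/day", 0.5)
```

A sender would open `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` once and call `send_wave` for each wave on every update, with the sound and light machines listening on their own ports.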

New Problems, Process Evolution

Composing in this manner, even when employing clever workarounds, most often involved waiting. Patience for combinations of events and surrender to the system we chose became necessary. Typically, in fixed and performed situations, we can play our instruments, write down notes, or instruct the computer to play back MIDI or other sequential events at our command. In the case of Phase Garden, we often found ourselves waiting for events to unfold, to play out over minutes or in some cases hours. We would often be surprised and at times delighted by what we heard.

As is the case with many large-scale artworks, we needed to prototype carefully and scale towards our installation plan. This means that before installation we have yet to hear or see our artwork on the system or in the site it was built for. Thankfully we have a few days to build, test, mix and tweak before the public arrives - but we are largely creating and designing for an environment we are only able to imagine.

Photo by Aram Boghosian